August 2012

Defending the Qualitative Approach against the Quantitative Obsession

25.08.12 - 10:43 - Filed in: Software Testing

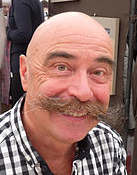

image credit: http://j.mp/NsbeVj

Some years ago I studied Sociology and General Linguistics at the University of Zurich. That was before my time as a software tester and I enjoyed Sociology a lot. Well, at least part of it. Quite interestingly, Sociology and - in my observation - many of the human sciences, display a minority complex towards the so called hard sciences such as Physics.

This leads to a sad obsession. Make everything quantifiable.

But I was much more interested in qualitative studies. There is a brilliant sociologist from France - Jean-Claude Kaufmann - who studied and described many of the deeply human activities and behaviors. For instance, how men behave on beaches where women do topless sunbathing. The book: Corps de femmes, regards d’hommes - La sociologie des seins nus (women’s bodies, mens looks - the sociology of naked breasts). A fascinating read!

Also, one of my lesser known heuristics is, that men with extravagant mustaches are interesting people who have captivating stories to tell. Jean-Claude Kaufman has an extravagant mustache. Judge yourself:

On the other hand, I have always found that quantitative Sociology has only boring stories to tell. Its findings tended to be things that everybody already knew. There is hardly any discovery. Not even mentioning the fact, that the whole complex of validity of what has been found out through measurement, leaves some questions open. Measurements often give a false sense of certainty.

Our good old friend Availability Bias enters the scene: "if you can think of it, it must be important”. And we are already deep in the domain of software testing.

It is not difficult to count something and put that counting result into relation to something else. And - hey! - we are already 50.4576% done. Only that this has no relation to any relevant reality. It is utter nonsense. It is dwelling in fantastic la-la-land. And our users couldn’t care less about 50.4576%. They want a joyful experience while using our application.

Peter Drucker - the famous management man - once said: “If you can’t measure it, you can’t manage it”. A simple sentence that stuck in the simple brains of too many simple contemporary managers. That statement is of course not true, as every parent on earth knows from empirical experience. You do not quantitatively bring up a child. You don’t draw progress charts. You tell stories and pass your time playing and laughing.

But because many managers are too busy collecting meaningless data, they no longer find time to read books and have missed that Peter Drucker later in his life had serious doubts about his initial statement. It is not only us testers who suffer from that lamentable laziness.

I’d rather go with Albert Einstein instead: “Not everything that can be counted counts, and not everything that counts can be counted.”

Eating my own Dog Food and thereby Wandering off from Time to Time

16.08.12 - 14:38 - Filed in: Software Testing

image credit: http://j.mp/M6d8JY

Some time ago I posted a set of questions. Some of my fellow testers posted their answers. Some of them were really good. Let’s see what my own reasoning is.

1. Is there a moment when asking questions becomes counter-productive?

a) If yes, when exactly and what does happen then?

b) If no, how do you know?

This immediately triggers a follow-up question. What do I mean by ‘counter-produtive’? A productive outcome - after having asked a question - would be: 1. the question resonates with the receiver and 2. generates an answer that helps the questioner to reduce uncertainty within the context of the question asked.

By ‘resonate’ I mean the receivers willingness to give you an answer. By ‘reduce uncertainty’ I also include an answers like ‘I don’t know’ or ‘ask somebody else’.

A counter-productive question either produces a failure with the former or the latter or both. In order for a question to resonate, the receiver needs to be ready to listen to you. If the receiver is in a mental state which incompatible with your need for an answer, the question becomes counter-productive. There is no use in asking anything if the receiver is stressed, does not feel competent or if the receiver is in an annoyed state of mind.

Some questions produce surprising answers. It may not be what you expected. Given that condition 1. is met, you should continue with refining your question until a productive answer is given.

In my perception the context-driven crowd describes itself as above average smart. This can result in asking questions just for the sake of it. It can be observed regularly on e.g. Twitter. I am guilty of it myself. I think we should exercise good judgment when asking questions. Am I just asking because I like my über-smart question, or do I really want to know?

2. Does puzzle solving make you a better tester?

a) If yes, what exactly is the mechanism?

b) If no, is the effect neutral or negative?

My answer to this question is: I don’t know. It could be the other way round. Good testers just like to exercise their brains and therefore like to solve puzzles more than others. Deliberate practice results in mastery.

Now, a good puzzle often asks for lateral thinking skills. Lateral thinking skills are good for general problem solving. And there is a lot of general problem solving in testing. Hence, exercising on puzzles might help to become a better tester.

3. Should we bash certified testers who are proud of their certifications?

a) If yes, what do we want to achieve with that action?

b) If no, why do we let these people spread ideas about bad testing?

No, we should not. There are many reasons why somebody has a certification and maybe it was hard work to obtain it and the hard work result in the tester being proud of his/her achievement. Also, attacking people hardens the relationship and certainly does not change the opinion of anybody.

What we should put our energy in, is, in the following order: 1. set a good example ourselves by demonstrating what good testing is 2. argue against the certification and the certification providers. The certification industry is driven by monetary ambitions and not by the urge to enhance testers’ skills. That is what we should point out. We should not let the certification industry spread bad ideas about testing.

I very much believe in nurturing positive alternatives. Let us show what good testing is and let us concentrate on building a valid alternative to certification. A tester generally has two possible paths: Be employed or be independent.

When a company hires a tester, they want to know if he or she can do the job. Current certification schemes not being an option, then how exactly do we meet that need? Peer certification? A general ‘reputation score’? How? I do not have a good answer to that.

4. Is having a high intelligence level a prerequisite for being a good context-driven tester?

a) If yes, what definition of intelligence is applicable?

b) If no, how can it be substituted and by what?

Software testing belongs to the knowledge worker domain. The acquisition of knowledge depends on your ability to do so. The Cattell–Horn–Carroll theory lists ten general areas of intelligence:

- Crystallized Intelligence

- Fluid Intelligence

- Quantitative Reasoning

- Reading & Writing Ability

- Short-Term Memory

- Long-Term Storage and Retrieval

- Visual Processing

- Auditory Processing

- Processing Speed

- Decision/Reaction Time/Speed

If you go through this list, you will probably agree that all are applicable to software testing to some extent. The better you are in each of these dimensions, the better your testing will be.

5. Is it true that many tester struggle with what a heuristic and an oracle are?

a) If yes, what is your explanation that it is so?

b) If no, where is your data?

What is an apple? It is a fruit that grows on trees. It is round-ish, edible and has either a green, yellow or red color or a mixture of these. And here is one. Have a bite.

Some things are easer to understand and to explain to others. Other things - and especially concepts - are more difficult. Generally, the more abstract a concept, the more difficulty people have with understanding it.

So, yes, heuristic and oracle are abstract concepts and therefore more people struggle with understanding what they are than with understanding what an apple is.

6. Can YOU give a quick explanation to somebody who doesn’t understand the concept?

a) If yes, how do you know you were understood?

b) If no, what part are you struggling with?

Ahem, let’s try a definition without referring to the existing ones of e.g. Michael Bolton:

Heuristic: A problem solving strategy that produces an answer without guarantee of neither its absolute correctness nor its best suitability nor its applicability for the task one is confronted with.

Oracle: Any valid reference used by a software tester in order to evaluate the observed with the desired.

And now comes the disclaimer: By just giving these one-sentence definitions I would not have any guarantee that I was understood. In order to know that, I would have to be in a dialogue for a longer period and observe if I was really understood. So, no easy and quick path here.

7. Is there a subject/topic that has no relevance whatsoever to the context-driven software tester?

a) If yes, can you give an explanation that entails detailed reasons of its inapplicability?

b) If no, how come?

I do not think so. There is the fantastic power of analogies. You can take any A and B and make a connection through an analogy. In my experience it is very fruitful to sometimes force analogies. When trying to connect two domains that appear to not be connected at all, the outcome can be rather surprising. Deliberately forcing analogies more often than not results in surprising insights and new ideas.