First Year at eBay

Exactly one year has passed since I joined eBay on July 1, 2012, and it has been quite a ride. Overall, the experience has been tremendously positive, mostly because the people I work with are both smart and a pleasure to spend time with.

Since my team is distributed across four locations in Europe (Zurich, Berlin, Paris, London) and our projects mostly originate in San Jose, California, I travel significantly more often than in my previous jobs. For many people, air travel is tiring; I don’t perceive it as such. On the contrary, I enjoy the time on a plane because it allows uninterrupted time to read a book or do some thinking.

My team covers 12 European eBay sites in 7 different languages. In the past year, we have tested roughly 100 feature projects that were rolled out to the European sites. I am impressed by my team’s capability to handle all this.

There are many different approaches to testing within eBay, which often leads to intensive discussions with my colleagues in the US. In many ways, the misunderstandings are not unique to eBay. For some reason, there is a widespread view of dichotomous antagonism between manual and automated testing, whereby automation is regarded by some as a superior form of testing.

In these kinds of discussions, I often appear to leave the impression of being against automation. Well, I am not. I am - however - against undirected/unreflected automation. I am against automation for the wrong purpose. I am against automation that is only done “because it is engineering”.

I am fiercely in favor of automation if it helps the team with their testing. I am forcefully in favor of automation if it does the checking necessary to indicate regression effects. I am emphatically in favor of automation if it does what automation does best - fast, repetitive checking of facts.

I’d be happy if one day the manual vs. automation discussion was no longer necessary.

Anyway, I am proud that my team does not quarrel with such lack of subtlety. They all have a sound mental model of how to do good testing. What more could I wish for? So, thank you team and everybody else in the organization I am in contact with for the splendid experience so far.

We Attended Rapid Software Testing because we Want to Grow our Testing Skills at eBay

For the second time this year, James Bach came to Switzerland. After having had one of the Keynotes at Swiss Testing Day in March (see the recording here) and spending time with me on solving puzzles, intensive discussions on testing and reviewing Skype coaching transcripts (see my previous post here), it was time for Rapid Software Testing at eBay.

As usual, James arrived in Switzerland a few days earlier in order to re-balance his jet lag. Knowing that James loves the mountains, I just had to show him Jungfraujoch, which is a spectacular ride on the train and also the highest train station in Europe. We walked on ice and worked out a graphical proof that the sum of the first n odd numbers, starting with 1, is n^2. (Send me your solution if you feel inclined to solve it graphically.)
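For reference, the statement we were trying to prove can be written down as follows. This is only the identity itself plus one small worked case, not the graphical proof, which I still leave to you.

```latex
\[
  \sum_{k=1}^{n} (2k - 1) = n^2,
  \qquad \text{e.g. } n = 4:\; 1 + 3 + 5 + 7 = 16 = 4^2 .
\]
```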

We at eBay International are a team of 13 testers. As we also wanted to connect with other testers, I extended my invitation to RST to other testers within the eBay family. The enthusiasm level was high and we ended up being 24 people from eBay, marktplaats.nl, mobile.de, brands4friends.de, tradera.se, dba.dk and two developers from eBay, who all found it important enough to spend 3 full days on software testing education.

James jumped right into getting our brains to work with an assignment on how to test font sizes in Wordpad. It appears to be a straightforward task until you spend some time thinking about it. What exactly are sizes of fonts? How do you measure them? On paper? On the screen? What screen? Is it even relevant for Wordpad? How relevant? Good testers immediately start by asking questions.

“If you talk about the number of tests without specifying their content, the discussion becomes meaningless” -- James Bach

Many of the exercises and ‘hot seat’ situations exemplified that software testing is a demanding and intellectually challenging activity. The hot seat situations consisted of one participant being engaged by James in solving a task. This was tough, since James is very skilled at spotting inaccurate thinking. And once he has done so, he is in your face and all over you. One needs to have nerves of steel in order to successfully navigate through the traps set by James; and the eBay testers mostly proved worthy.

As much as one needs good technical understanding in software testing, there are other qualities equally important. An exercise on ‘shallow agreement’ - a state where you believe that you are talking about the same thing - demonstrated how important the clarification of assumptions is in software testing.

And why can’t you just automate everything? Simple reason: you can only automate what you know (and even in that category, most would be impractical since the costs involved in automating it would be too high).

In non-human systems there is a fundamental absence of peripheral wisdom, which is what allows collateral observation. Humans are very good at detecting violations of expectations they did not even know they had. Does that sound obscure? Talk to me on Skype (ID: ilarihenrik) and I can show you with an exercise.

I am very happy that we had the opportunity to spend 3 full days on deep and active reflection on software testing. It is important to acknowledge that software testing consists of more than just writing scripts and fiddling with automation frameworks. A skillful tester chooses his/her tools according to the needs presented by the testing challenge, and not blindly by following some imaginary ‘best practices’.

Two areas I will focus on with my team in the near future: modelling and reporting. Modelling for its necessity to grasp the complexity of a big system like eBay, reporting out of the need to be able to explain what we do. You not only need to be good at testing but also at explaining what you do. A good report should give an accurate impression of what I as a tester did during the period I tested.

I believe it would be a good idea if more executives in large companies attended Rapid Software Testing. It might clarify some of the misguided assumptions on what software testing is all about.

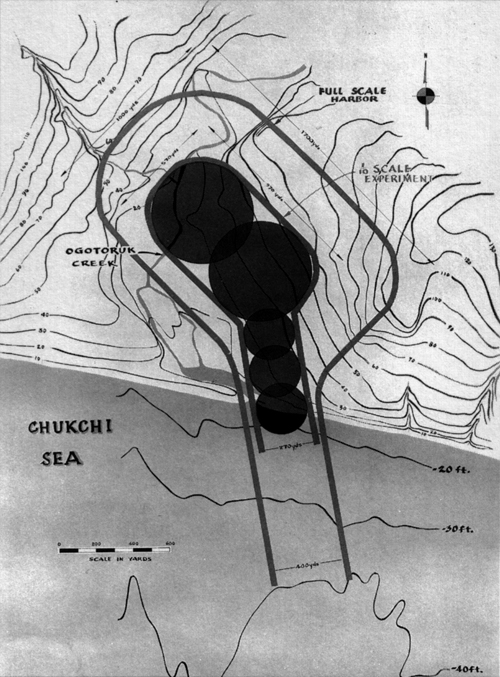

How Operation Plowshare is Only Partially a Good Analogy for the 'Full Automation' Extremists

Back in the sixties, some military dickheads had a good idea. Why not use atomic bombs for engineering purposes? Let’s bomb ourselves a harbor. Let’s get rid of that nasty mountain. Or why not just build a new canal between the Pacific and the Caribbean Sea?

One bomb after the other planted in a row and - hey - he he, it’s automated and we need to do nothing else but pull the trigger. A good name had to be found and what would be more obvious to choose from for the god fearing good Americans than the holy scripture?

"And he shall judge among the nations, and shall rebuke many people: and they shall beat their swords into plowshares, and their spears into pruning hooks: nation shall not lift up sword against nation, neither shall they learn war any more" - Somewhere in the Bible

And that is how Operation Plowshare was born to henceforth bring the blessings of the modern age to mankind. Sounds good, doesn’t it? Only that quite obviously there are some shortcomings to this kind of project. Radiation might leave the harbor useless, the costs could rise to astronomic proportions. They did not care about things like that and so the project died a silent death somewhere in the seventies.

Just recently I overheard some snippets of a conversation in the cubicle next to mine: “Yeah, just give me the coverage”, “No, I am only interested in the automation part”, and the best of all: “I don’t care”. You don’t care? Really?

Does that sound like Operation Plowshare?

It is the same mindset that takes one advantage (creating a hole quickly/executing a test suite rapidly and repeatedly) and completely disregards any shortcomings (radiation wasteland/untested wasteland). The ‘full automation’ extremists might not even have a good mental model of what ‘full’ or ‘complete’ means. Just do it for the sake of it because it is ‘engineering’, right?

But the analogy is only partially valid. Unlike nuking ourselves some harbors, test automation has value. It does have huge value, actually, if it is applied for the right purpose. In some cases, it is enormously helpful for regression testing, but it does not give any ‘guarantee’ for not having messed up.

Automation checks things, and it checks only what has been thought of and might (or might not) catch anticipated bugs. It is a lightweight insurance ticket for a part of the system. And in its execution it is fast (not so much for its development, though). That’s all.
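To make this concrete, here is a minimal sketch in Python. Everything in it is made up for illustration: `listing_fee`, its 10% rule and the cap of 50 are hypothetical and have nothing to do with real eBay code. The point is only that an automated check confirms the facts somebody wrote down in advance and nothing else.

```python
# Hypothetical example: the function and its fee rule are illustrative only.
def listing_fee(price: float) -> float:
    """Toy function under test: 10% fee, capped at 50.0."""
    return min(round(price * 0.10, 2), 50.0)


# The facts somebody thought of in advance: (input price, expected fee).
EXPECTED_FACTS = [
    (10.0, 1.0),
    (100.0, 10.0),
    (1000.0, 50.0),
]


def run_checks() -> list[str]:
    """Return a description of every anticipated fact that no longer holds."""
    failures = []
    for price, expected in EXPECTED_FACTS:
        actual = listing_fee(price)
        if actual != expected:
            failures.append(
                f"listing_fee({price}) = {actual}, expected {expected}"
            )
    return failures


if __name__ == "__main__":
    # Fast and repeatable, but blind to anything not listed above.
    print(run_checks() or "all anticipated checks pass")
```

All three checks pass, yet `listing_fee(-5.0)` happily returns a negative fee, because nobody thought of writing that fact down. A human poking at the system would probably stumble over it; the script never will.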

Is it somewhere rooted in the western thinking style that we seem to insist on opposing binary categorization for concepts and ideas? It is either or, not both. It is complete nonsense to think of automation and manual sapient testing as being opposites. They are not.

So, let’s not be military dickheads and instead apply test automation and manual sapient testing each where it is most suitable. Maybe sometime in the future, the ‘let’s automate the hell out of it’-idea also dies a silent death, much like Operation Plowshare did.

Defending the Qualitative Approach against the Quantitative Obsession

image credit: http://j.mp/NsbeVj

Some years ago I studied Sociology and General Linguistics at the University of Zurich. That was before my time as a software tester and I enjoyed Sociology a lot. Well, at least part of it. Quite interestingly, Sociology and - in my observation - many of the human sciences, display an inferiority complex towards the so-called hard sciences such as Physics.

This leads to a sad obsession. Make everything quantifiable.

But I was much more interested in qualitative studies. There is a brilliant sociologist from France - Jean-Claude Kaufmann - who studied and described many of the deeply human activities and behaviors. For instance, how men behave on beaches where women do topless sunbathing. The book: Corps de femmes, regards d’hommes - La sociologie des seins nus (women’s bodies, men’s looks - the sociology of naked breasts). A fascinating read!

Also, one of my lesser known heuristics is that men with extravagant mustaches are interesting people who have captivating stories to tell. Jean-Claude Kaufmann has an extravagant mustache. Judge for yourself:

On the other hand, I have always found that quantitative Sociology has only boring stories to tell. Its findings tended to be things that everybody already knew. There is hardly any discovery. Not to mention that the validity of what has been found out through measurement leaves some questions open. Measurements often give a false sense of certainty.

Our good old friend Availability Bias enters the scene: “if you can think of it, it must be important”. And we are already deep in the domain of software testing.

It is not difficult to count something and put that counting result into relation to something else. And - hey! - we are already 50.4576% done. Only that this has no relation to any relevant reality. It is utter nonsense. It is dwelling in fantastic la-la-land. And our users couldn’t care less about 50.4576%. They want a joyful experience while using our application.

Peter Drucker - the famous management man - once said: “If you can’t measure it, you can’t manage it”. A simple sentence that stuck in the simple brains of too many simple contemporary managers. That statement is of course not true, as every parent on earth knows from empirical experience. You do not quantitatively bring up a child. You don’t draw progress charts. You tell stories and pass your time playing and laughing.

But because many managers are too busy collecting meaningless data, they no longer find time to read books and have missed that Peter Drucker later in his life had serious doubts about his initial statement. It is not only us testers who suffer from that lamentable laziness.

I’d rather go with Albert Einstein instead: “Not everything that can be counted counts, and not everything that counts can be counted.”

Eating my own Dog Food and thereby Wandering off from Time to Time

image credit: http://j.mp/M6d8JY

Some time ago I posted a set of questions. Some of my fellow testers posted their answers. Some of them were really good. Let’s see what my own reasoning is.

1. Is there a moment when asking questions becomes counter-productive?

a) If yes, when exactly and what does happen then?

b) If no, how do you know?

This immediately triggers a follow-up question. What do I mean by ‘counter-productive’? A productive outcome - after having asked a question - would be: 1. the question resonates with the receiver and 2. generates an answer that helps the questioner to reduce uncertainty within the context of the question asked.

By ‘resonate’ I mean the receiver’s willingness to give you an answer. By ‘reduce uncertainty’ I also include answers like ‘I don’t know’ or ‘ask somebody else’.

A counter-productive question either produces a failure with the former or the latter or both. In order for a question to resonate, the receiver needs to be ready to listen to you. If the receiver is in a mental state which is incompatible with your need for an answer, the question becomes counter-productive. There is no use in asking anything if the receiver is stressed, does not feel competent or if the receiver is in an annoyed state of mind.

Some questions produce surprising answers. It may not be what you expected. Given that condition 1. is met, you should continue with refining your question until a productive answer is given.

In my perception the context-driven crowd describes itself as above average smart. This can result in asking questions just for the sake of it. It can be observed regularly on e.g. Twitter. I am guilty of it myself. I think we should exercise good judgment when asking questions. Am I just asking because I like my über-smart question, or do I really want to know?

2. Does puzzle solving make you a better tester?

a) If yes, what exactly is the mechanism?

b) If no, is the effect neutral or negative?

My answer to this question is: I don’t know. It could be the other way round. Good testers just like to exercise their brains and therefore like to solve puzzles more than others. Deliberate practice results in mastery.

Now, a good puzzle often asks for lateral thinking skills. Lateral thinking skills are good for general problem solving. And there is a lot of general problem solving in testing. Hence, exercising on puzzles might help to become a better tester.

3. Should we bash certified testers who are proud of their certifications?

a) If yes, what do we want to achieve with that action?

b) If no, why do we let these people spread ideas about bad testing?

No, we should not. There are many reasons why somebody has a certification and maybe it was hard work to obtain it and the hard work results in the tester being proud of his/her achievement. Also, attacking people hardens the relationship and certainly does not change the opinion of anybody.

What we should put our energy into is, in the following order: 1. set a good example ourselves by demonstrating what good testing is, and 2. argue against the certification and the certification providers. The certification industry is driven by monetary ambitions and not by the urge to enhance testers’ skills. That is what we should point out. We should not let the certification industry spread bad ideas about testing.

I very much believe in nurturing positive alternatives. Let us show what good testing is and let us concentrate on building a valid alternative to certification. A tester generally has two possible paths: Be employed or be independent.

When a company hires a tester, they want to know if he or she can do the job. Current certification schemes not being an option, then how exactly do we meet that need? Peer certification? A general ‘reputation score’? How? I do not have a good answer to that.

4. Is having a high intelligence level a prerequisite for being a good context-driven tester?

a) If yes, what definition of intelligence is applicable?

b) If no, how can it be substituted and by what?

Software testing belongs to the knowledge worker domain. The acquisition of knowledge depends on your ability to do so. The Cattell–Horn–Carroll theory lists ten general areas of intelligence:

- Crystallized Intelligence

- Fluid Intelligence

- Quantitative Reasoning

- Reading & Writing Ability

- Short-Term Memory

- Long-Term Storage and Retrieval

- Visual Processing

- Auditory Processing

- Processing Speed

- Decision/Reaction Time/Speed

If you go through this list, you will probably agree that all are applicable to software testing to some extent. The better you are in each of these dimensions, the better your testing will be.

5. Is it true that many testers struggle with what a heuristic and an oracle are?

a) If yes, what is your explanation that it is so?

b) If no, where is your data?

What is an apple? It is a fruit that grows on trees. It is round-ish, edible and has either a green, yellow or red color or a mixture of these. And here is one. Have a bite.

Some things are easier to understand and to explain to others. Other things - and especially concepts - are more difficult. Generally, the more abstract a concept, the more difficulty people have with understanding it.

So, yes, heuristic and oracle are abstract concepts and therefore more people struggle with understanding what they are than with understanding what an apple is.

6. Can YOU give a quick explanation to somebody who doesn’t understand the concept?

a) If yes, how do you know you were understood?

b) If no, what part are you struggling with?

Ahem, let’s try a definition without referring to the existing ones of e.g. Michael Bolton:

Heuristic: A problem solving strategy that produces an answer without guarantee of its absolute correctness, its best suitability or its applicability for the task one is confronted with.

Oracle: Any valid reference used by a software tester in order to evaluate the observed against the desired.

And now comes the disclaimer: By just giving these one-sentence definitions I would not have any guarantee that I was understood. In order to know that, I would have to be in a dialogue for a longer period and observe if I was really understood. So, no easy and quick path here.

7. Is there a subject/topic that has no relevance whatsoever to the context-driven software tester?

a) If yes, can you give an explanation that entails detailed reasons of its inapplicability?

b) If no, how come?

I do not think so. There is the fantastic power of analogies. You can take any A and B and make a connection through an analogy. In my experience it is very fruitful to sometimes force analogies. When trying to connect two domains that appear to not be connected at all, the outcome can be rather surprising. Deliberately forcing analogies more often than not results in surprising insights and new ideas.

Goodbye my Old Friend Phonak; and Hello my New Friend eBay

It is one of those rare events; at least for me who is not a job hopper. I have a couple more minutes left of being officially employed by my old employer Phonak AG, with whom I have proudly spent the last almost eight years. And then I will equally proudly become an eBay man. Yet, the transition feels odd.

PHONAK

In December 2004 I was studying at the university in Zurich and the only reason I started to work with Phonak was that I was in urgent need of money. How could I have known that it was the start of a career in software testing? And how could I have known that I would get seriously hooked on the context-driven school? James Bach, of course, was not at all innocent in all this.

Phonak has been an excellent employer for me and I would especially like to thank my former boss - Philipp - for everything he has inspired in me. Actually, why don’t you just have a look at his recently started blog to see what a marvelous free thinker a software development unit director can be. Philipp is a remarkable person, who, with his razor-sharp analytic abilities, yet relaxed compassion for people, was a pleasure to work for. May milk and honey always flow in his general direction.

And now the time has come for a change.

EBAY

I have been a line manager for a total of 13 people. It has been rewarding and on the other hand it does not leave a lot of time to do actual testing. As I have written in a post a while ago, it has been something I wanted to change. eBay has miraculously offered me this opportunity. I can have the best of both worlds, still doing line manager work by enabling people to achieve their best and also doing hands-on testing. How great is that!

It will be fun to read this post in a few months when I know more about eBay. So, what do I aim for within eBay?

My goal is nothing less than making my group of testers world class. And by world class I mean a team of fantastic individual personalities that is the envy of every other company. One that has sparkle in their eyes when they talk about software testing. One that inspires. One that understands world class as not being the end of a journey but constantly being on the road in search of excellence. One that every other manager would hire on the spot, only that none in the team would be interested for any price in the world, because they cannot have a better place to work at than eBay. One that has a smile on their faces.

This is bold, yes, and I would like to end this post with some beautiful sentences by a great man:

“Impossible is just a big word thrown around by small men who find it easier to live in the world they've been given than to explore the power they have to change it. Impossible is not a fact. It's an opinion. Impossible is not a declaration. It's a dare. Impossible is potential. Impossible is temporary. Impossible is nothing.” - Muhammad Ali

With this in mind - dear eBay - let’s see what I can do for you. I am looking forward to getting to know you all. See you on Monday.

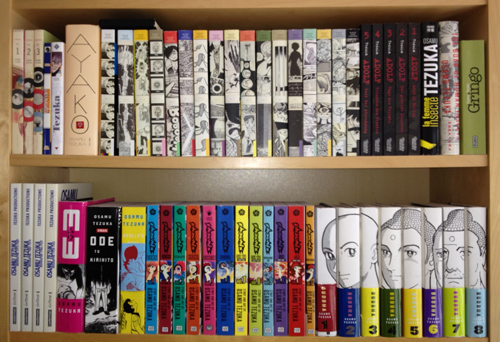

What we can learn from the great Osamu Tezuka

(IMPORTANT: If you think comics are for children, please contact me on Skype before you continue reading. I’d like to have a clarifying chat with you. My ID: ilarihenrik)

Osamu Tezuka drew a lot in his short life, which unfortunately lasted only 60 years. The mangas and gekigas above are from my Tezuka section and they represent only 8.2% of what he created in his lifetime. Osamu Tezuka is one of the most incredible practitioners, not only by the standards of the manga industry.

We test software, Osamu Tezuka draws stories. Is there anything to learn from the great man? Or is this just some forced analogy? Maybe, maybe not. Let’s see if there is something we can make use of.

Do it every day

Osamu Tezuka drew every single day of his life. There was no day where he just sat around idly. He drew more than 700 tomes of manga with a total of about 150,000 pages and he was involved in countless films as well.

Shouldn’t we consider practicing our craft every single day? What have YOU done software testing wise today? Regular practice is the key to greatness.

Be a good story teller

Reading Tezuka makes you aware of his incredible story telling abilities. Some of his mangas stretch over more than 2500 pages (e.g. the great Buddha series, in the lower right corner of the picture above), and there are simply no passages that are boring. His stories are fantastic.

In order to practice bug advocacy, you essentially need to be a good and convincing story teller. Testing still often needs to be “sold”. The better you can come up with a convincing story of why it is important, the better your life as a tester will be.

Assemble people who help you

In order to keep up the pace, Tezuka had a big group of people who helped him with his stories by doing the blackening or the application of patterns or drawing the less prominent characters in the background. Tezuka acknowledged that he could not do everything himself and that it is helpful to have friends in his proximity who not only helped with the texturing but also criticized his work.

I believe that is something which makes the context-driven school of testing very strong as well. We tend to watch ourselves and give constant feedback while pushing ourselves to become better every day. It is a value we should be aware of.

To have some additional educational background is helpful

It might be a lesser known snippet of fact that Osamu Tezuka was a medical doctor. Although he never practiced as a physician, he actually graduated as a doctor. This resulted in him being able to draw physiologically correct and fascinating details in some of his stories. I can highly recommend his collection of short stories about the outlaw doctor Black Jack. These short stories are outstanding. And they are outstanding because Tezuka included a second skill into his art. The fusion resulted in something far more remarkable.

That’s why it would make sense for us testers to educate ourselves in a wide variety of other topics. We should read about social sciences, immerse ourselves in ethnological methods of qualitative data gathering, psychology, cognitive sciences and of course mathematics should become our friends. And a lot of other matter, too.

Not being certified allows you to be great

Ha! Who would have thought that Tezuka is with us in this regard? Again, his outlaw doctor Black Jack is NOT CERTIFIED. And he does not even want to be certified because he has trained his skills to become the greatest surgeon on the planet.

Mushi Production

Well, the translation of the name of his animation studio - Mushi Production - is: bug production.

(An irrelevant yet interesting side note: While writing this post, my editor constantly tried to correct “mangas” to “mangos”, which I found hilarious because of my automatic spell checker’s complete unawareness of context)

Major Consensus Narrative, Asking Supposedly Hyper-Smart Questions and Being Context-Driven

image credit: http://j.mp/JSuYkO

As the title might suggest, there is a mix of different ideas and questions in this post. We may even have Virginia Satir coming for a short visit. But at the end you will see - I hope - that everything is connected.

Very recently

I am not the only one who is convinced that reality is a social construction. There is no truth as such in pure form. Or as the saying goes, there are 4 truths; mine, yours, “The Truth” and what really happened. For those among you who understand German, I highly recommend episode 23 of the excellent Alternativlos podcast. You may listen to “Verschwörungstheorien, die sich später als wahr herausstellten” here.

What concerns me is the major consensus narrative. It is what the majority has agreed on to be true. To link that to the context-driven school of software testing: Are we in love with the idea of having found “The Truth”? Do we run the danger of reciting self-reinforcing mantras that might be wrong? How do we know if we wander astray?

These are important questions that need to be asked. Martin Jansson - on the other hand - reported on his test lab experience at Let’s Test conference and told how some of the more seasoned testers almost brought his efforts to a halt by not stopping to ask questions. Paul Holland told the story of James Bach having become an unstoppable questioner at a peer conference.

Is it always good to ask questions? How long and how many? When do I stop? Is it a “Just because I can” attitude to prove that I am hyper-smart? I have a potpourri of questions I recently asked myself:

1. Is there a moment when asking questions becomes counter-productive?

a) If yes, when exactly and what does happen then?

b) If no, how do you know?

2. Does puzzle solving make you a better tester?

a) If yes, what exactly is the mechanism?

b) If no, is the effect neutral or negative?

3. Should we bash certified testers who are proud of their certifications?

a) If yes, what do we want to achieve with that action?

b) If no, why do we let these people spread ideas about bad testing?

4. Is having a high intelligence level a prerequisite for being a good context-driven tester?

a) If yes, what definition of intelligence is applicable?

b) If no, how can it be substituted and by what?

5. Is it true that many testers struggle with what a heuristic and an oracle are?

a) If yes, what is your explanation that it is so?

b) If no, where is your data?

6. Can YOU give a quick explanation to somebody who doesn’t understand the concept?

a) If yes, how do you know you were understood?

b) If no, what part are you struggling with?

7. Is there a subject/topic that has no relevance whatsoever to the context-driven software tester?

a) If yes, can you give an explanation that entails detailed reasons of its inapplicability?

b) If no, how come?

I have my answers to the questions. But, please, my friends, post YOURS in the comments below. I would love to see a variety of reasonings.

You may have asked yourself at the beginning of this post what the fragment “Very recently” was doing there without culminating in a full sentence. That is a valid question. You might even have formed a hypothesis about what it was doing there. “Just an editing error”, “probably a section title”, “haven’t noticed”, “maybe it is clickable”, “semantic ambiguity of hypnotic language” could have been some of the guesses.

I promised in the entry sentences that at the end everything will be connected. Actually, it is not at all; this post is very messy. Please, don’t shout at me because of that. And Virginia Satir might be in one of the next posts. I’d definitely like to talk about her.

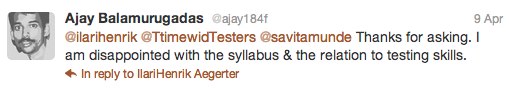

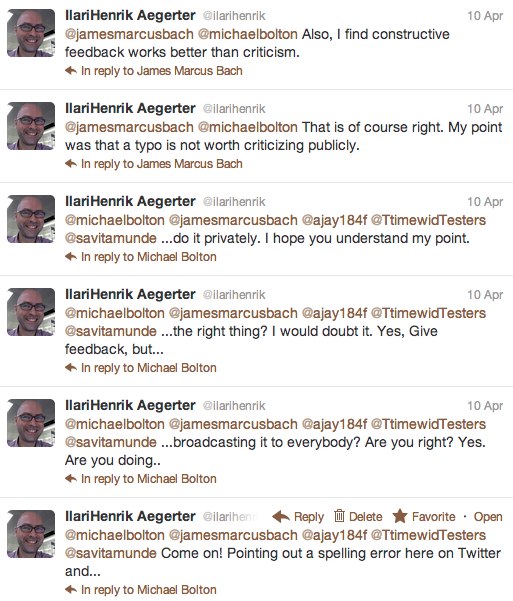

Thou Shall Test if you Catch yourself Assuming

image credit: http://j.mp/HAv17F

The best lessons to learn are the ones that happen to yourself. This week, I experienced a textbook example of such a thing. I was making a bunch of assumptions and didn’t care to verify them. Today, I shake my head in disbelief.

What happened?

On Monday, 9 April, Ajay wrote in a short tweet that he was disappointed with the syllabus of an online course. The course was published here:

http://www.qualitytesting.info/forum/topics/certifications-might-get-you-job-but-only-your-testing-skills-wil

and the syllabus here:

http://qualitylearning.in/software-testing-syllabus

(it has already changed for the better in the current version)

I then asked him what he meant by “disappointed”. I was also shocked because I knew that the online course was supposed to be given by Savita Munde whom I hold in high regard as a serious tester. I have been coaching Savita for some time and I just couldn’t believe what was happening.

Both James Bach and Michael Bolton followed up with their own tweets supporting Ajay. I became angry and wrote back:

Following that I had a back and forth discussion by e-mail with James Bach where I tried to defend Savita. My point was that since she is a serious tester she cannot be criticized like that. I couldn’t stop. I was angry.

Apparently my thinking was clouded as well. All the time I did not talk to Savita directly although it was exactly what I should have done. In the meantime the syllabus was changed and when I eventually talked to Savita she told me that the syllabus was a mistake and not published by her.

Always test your own assumptions

I am glad this happened to me because it taught me again that I need to be on guard against my own assumptions.

BTW: Read also Ajay’s blog post about it. I bow to him for his deeply human and fair reaction to the whole story.

After some iterations of thinking I came up with the following decision graph. It may be of great help the next time I make an assumption. The good thing is that it is quite simple.

Has something similar happened to you? Tell me in the comments below. I am very much interested.

There Wouldn't Be any Fat People Left if the Machines finally Took over

image credit: http://j.mp/Aoky2E

Recently I saw three enormously fat men standing close to each other, and what caught my eye was the huge bellies all three put on display for the world to see.

If they had moved a tiny bit closer, the bellies would have met with a gentle touch.

They did not do that but they were discussing stuff. They were chatting and telling jokes. In short: they were being human with all the pleasant properties as well as the flaws humans tend to have.

Test automation hasn’t got a belly at all. Neither does it crack jokes. Why should it? It would not be of any use. Test automation hasn’t got any humor but it is lightning fast and incredibly accurate.

It is kind of obvious: Humans and computers are different. Both are good at some things and have their shortcomings somewhere else.

You certainly know testers who say that test automation should not be considered at all for testing. They say that it does not help doing a tester’s job and by saying that they are - in my opinion - fundamentally wrong.

I am sometimes amazed by the inaccurate thinking of some people. Just because test automation cannot do some things it does not mean it cannot do anything. That’s like saying: “This car is useless, it cannot fly”.

What test automation is good at:

- Fast checking of huge amounts of data that is prone to change and therefore to errors

- Doing repetitive procedures over and over again

- Accessing code that is not easily accessible through the UI

- Doing basic sanity checks at regular intervals

Test Automation is very, very good at the above. Beer bellies aren’t.
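To make “fast, repetitive checking of facts” a bit more tangible, here is a minimal sketch in Python. Everything in it is made up for illustration: the `LISTINGS` records, the `ALLOWED_CURRENCIES` set and the two rules are assumptions, not anything taken from a real system. The point is only that a machine applies the same boring rules to every record, every time, without getting tired.

```python
from decimal import Decimal

# Hypothetical records; in real life these might come from a database export.
LISTINGS = [
    {"id": 1, "price": "19.90", "currency": "EUR"},
    {"id": 2, "price": "-5.00", "currency": "EUR"},
    {"id": 3, "price": "12.50", "currency": "XXX"},
]

# Assumed set of valid currencies, chosen only for this example.
ALLOWED_CURRENCIES = {"EUR", "GBP", "CHF"}


def check_listing(listing):
    """Return a list of rule violations for one record (empty if it passes)."""
    problems = []
    if Decimal(listing["price"]) <= 0:
        problems.append("price must be positive")
    if listing["currency"] not in ALLOWED_CURRENCIES:
        problems.append("unknown currency")
    return problems


def check_all(listings):
    """Run the same checks over every record and collect the failures by id."""
    failures = {}
    for listing in listings:
        problems = check_listing(listing)
        if problems:
            failures[listing["id"]] = problems
    return failures


if __name__ == "__main__":
    for listing_id, problems in check_all(LISTINGS).items():
        print(listing_id, problems)
```

Note what the sketch cannot do: it only finds the problems somebody thought of in advance and wrote down as a rule. Deciding which rules are worth checking is still a job for a human.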

The excellent book Mind over Machine by Hubert L. and Stuart E. Dreyfus describes it accurately:

Computers are general symbol manipulators, so they can simulate any process which can be described exactly.

On the other hand, humans are splendid observers, even if they don’t anticipate beforehand what they are going to discover. A tester who finds a bug during an exploratory testing session and is asked how he/she found it will sometimes reply: “I don’t know.” Finding a bug without knowing exactly how it was done differs enormously from the definition of a computer above.

What humans are good at:

- Collateral observation

- Rapid change of direction if new information demands it

- Acquisition of knowledge in general

- Taking advantage of the wisdom of crowds

- Using intuition

Beer bellies are very, very good at the above. Test automation isn’t.

I think our western culture is very much imprisoned in a dichotomous either-or-mindset. There seems to be a need for a constant opposition between two positions. Hence you either do manual testing OR automated testing.

This is a silly standpoint. Use both. And do it the same way a good manager distributes tasks among his direct reports: every task according to the individual strengths of the people.

There wouldn’t be any machines left if the fat people finally took over. And this is also true the other way round. It would be a sad world.

Stay Calm, Get another Beer and Keep on Thinking

image credit: http://j.mp/z6Y8PL

This week there was quite some ruckus about the alleged passing of the context driven school. All this was caused by what Cem Kaner wrote on the about page on www.context-driven-testing.com:

“However, over the past 11 years, the founders have gone our separate ways. We have developed distinctly different visions. If there ever was one context-driven school, there is not one now. Rather than calling it a “school”, I prefer to refer to a context-driven approach.”

Oh, no! We have lost our safehouse where we found warmth and shelter. Now we insecure testers are again out in the cold and wandering about aimlessly. Let us therefore all shut down our brains and immediately join the factory school.

Honestly, I am surprised by this eruption of un-coolness. Cem just decided to do something else. He is a free man. He can do whatever he wants. That is not a problem at all. A school does not just disappear. Maybe if it is a school-“building” and you - let’s say - blow it up with a few strategically placed sticks of dynamite. And even then it does not completely disappear. On the other hand schools of thought are immune to dynamite and very rarely disappear just like that. And even less so if there are many people still acting according to the principles every day.

What is a school of thought?

A school of thought is a collection of people who share the same or very similar beliefs about something. So as an example: Zen Buddhism is a school of the Mahayana branch of Buddhism. They share some beliefs. And the context-driven school consists of people who subscribe to the following beliefs:

The seven basic principles

- The value of any practice depends on its context.

- There are good practices in context, but there are no best practices.

- People, working together, are the most important part of any project’s context.

- Projects unfold over time in ways that are often not predictable.

- The product is a solution. If the problem isn’t solved, the product doesn’t work.

- Good software testing is a challenging intellectual process.

- Only through judgment and skill, exercised cooperatively throughout the entire project, are we able to do the right things at the right times to effectively test our products.

How could these principles possibly have become invalid just because one person (albeit an important one) wrote that the school is no more? And now comes the crucial point: That is not even what Cem wrote. He wrote “If there ever was one context-driven school, there is not one now”. This only states that the founders have developed differently over time and may have even been different from the beginning. Not so surprising. I do not know of any two people with redundant brains. Well, maybe Thomson & Thompson from Tintin. But that is the comics world.

Stanislaw Lem said it perfectly in his advice for the future: “Macht euch locker und denkt ein bisschen!” (Take it easy and think a bit)

Therefore here is my advice: “Stay calm, get another beer and keep on thinking.”

BTW: Almost all domain name combinations of www.context-driven-school.com / .net / .org / .etc are still available. I am sure somebody among you has a good idea what to do with it.

How Should I Know how Testing Is Going to Be in 20 Years?

image credit: http://j.mp/y6XGfm

Many people have wanted to look into the future and see how things are going there. This is what I am about to do right now. I can tell you exactly how software testing will be in the year 2032. Want to hear more? Ok, here we go:

It will be different

I have to admit that this was kind of anticlimactic and maybe even a bit disappointing to the ones among you who are always keen to get all the nice little details served on a dinner plate and spoon-fed to your mouths. Sorry, I have to let you down. Well, to be honest, no, I am not really sorry.

Go back to 1992. Almost no internet. No smart phones. Very few testers. Mostly highly scripted test cases in waterfall processes. Who would have guessed what today’s testing world would look like? Although, this video is kind of interesting. (As a side note: it also reflects the role thinking of 1969.)

Assuming that there is an acceleration in the development of new technology and a somewhat less speedy development on the human side it would be crazy for someone to “know” about the future. This highly dynamic and complex system is not very transparent for crystal ball predictions. So, don’t fool yourself into believing that is possible.

What is thinkable?

Computer science realizes that it is inherently a human and social science. Therefore a lot of thinking will go into how teams can collaborate better. The stereotype of the non-communicative nerd developer will eventually prove to be wrong.

Software development practices will most certainly evolve in a direction that becomes even less error prone. Future compilation mechanisms will prevent many more of the errors from happening. Hence, fewer of the “stupid” sort of bugs. That means it will be more difficult to find bugs.

Testers, on the other hand, will have fully understood the concept of applied epistemology and will use a rich set of heuristics during every testing activity, having completely stopped whining about their poor state of affairs.

Hm, some spacey element is still missing here. Ahm, ok, let them all have a huge gadgetry of enhanced reality goggles and exoskeleton support with intelligence enhancing psycho pills implanted directly in the brain. And maybe find use for some of these, too. Or something like that.

What won’t happen?

Everybody who thinks there won’t be any testers in 20 years is indulging in crazy talk. No, we won’t have everything automated because thinking cannot be automated and therefore the design of automation needs human brains. Ideally, brains with a tester’s mindset. Running automated tests is checking, not testing.

Automation is important, too, but it is not everything that is needed for wholesome testing. And just think of it: Writing code in 2032 will still be a very, very manual process in the deepest sense of its meaning, assuming that developers still use their hands to write it.

What can we wish for?

I would wish that testers in general develop pride in their craft. Whenever somebody with the title “tester” says she/he hasn’t read anything about testing it will be as if somebody was swearing filthily in a church while the pope himself is present. Unheard of.

So what is my message here?

I think it would make sense for all of us testers to spend some time reflecting on where the journey is about to go. On the way we should all keep learning new stuff and keep our senses alert and our brains busy. Let’s learn new stuff every day.

This morning testing was somewhere, then the day passed and already now it has progressed to the next stage. I am very curious to know how it is going to be in the future.

What’s your view? How do you think software testing will evolve over the next 20 years? If you could influence it, what spin would you give to software testing? What is it you would like to see more? What less? Tell me more in the comments below.

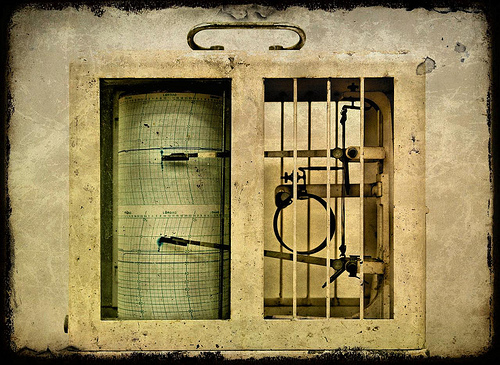

Bug Tracking Disorders May Result in Compulsive Counting

image credit: http://j.mp/wRWveO

Interviewer:

So, how do you handle your bugs?

Interviewee:

Finally, we don’t have to talk to each other anymore. Why should we? We have a bug tracking tool now. It’s called “Brave New Bug 1984”. Everything goes in there. Even the slightest suspicion is reported. Unit and integration tests that fail result in a report in the tool. That is something we the noble quality police fought hard for. And we have set high goals for ourselves. The next step will be that even if a developer e.g. makes a typo - something like: accidentally typing two closing brackets - he/she can no longer just correct it. It must go in the bug tracking tool. And then we will issue metrics and reports. About every single developer. We know exactly who the bad apples are, and then we can talk to HR directly, and we will fire people, and… [Interviewee starts to salivate]

Interviewer:

Have you lost your mind?

That’s right. This is the voice of madness. And hopefully there is no company that practices bug tracking that way. But - who knows - if it exists and you work there, then do yourself a favor and quit your job today.

Most of us use a tool to track bugs and I have a question: Why? What is the reason to use a tool for bug tracking? Hmm?

Our brains are very good at being creative, socializing with our friends, playing cards and drinking a beer. Not so good at keeping updated lists in our heads. That is where a bug tracking tool comes in handy. It outsources our list keeping. So, the bug tracking system prevents us from forgetting about things. Bugs, in this case.

But does that mean that every single little thing in every single little situation must go in the tracking tool? What about tester feedback from exploratory testing sessions at the daily stand ups in an agile environment? Is it really necessary to have it all in a tool? Maybe so, maybe not. Just decide for yourselves. Maybe even have some of the bugs tracked and others not. I don’t see it as an either this or that game.

Another made-up reason for being strict about handling all bugs in the tool could be that you as a test manager are fond of reporting the total number of bugs in a project. “We have found 53’732 bugs during this project”. My reaction to that: “So what?”

Compulsive counting is something we used to do as school boys. In our case we used to brag about being in possession of 53’732 different posters from discotheques. You know, we used to drive around with our mopeds and take discotheque posters off the walls. They looked like this:

Ridiculous, isn’t it? But excused, because a weak beard growth does not really help being sensible.

So don’t become this compulsive counting freak in your company, and if you feel like reporting any number then be sure it has some meaning. The number of bugs found, however, does not inherently have meaning. It does not say anything about the quality of the product.

BTW: This very old article by Joel Spolsky is quite a good read and still valid.

Whining Testers Are not Fun to Spend Time with

I love what I am doing. If I didn’t, I would do something else. Like gardening or pursuing a career as a beach bum. Sun tan and a lot of booze. But no, it is software testing and managing software testers and talking to developers and shipping tested software that I like.

But from time to time I observe a pattern that seems to be especially endemic among software testers. It is the tendency to whine about one’s own work situation while blaming everybody around us for causing it.

Let’s bullet point what some of the testers I have met at conferences not only think but also say aloud (The last one is slightly modified. Instead of the url’ed word, another one was used):

- I am fine, they are not

- I do a pristine job, they are slobs

- I could be so much better if only they did their job

- I would immediately start testing if only they’d provide me with perfect, unambiguous, neatly versioned, accurate, testable, re-usable, superman-feature-like requirements

- I am a saint, they’re rectal cavities (caution: link not safe for work)

Isn’t it interesting to observe how this follows the bipolar structures usually found in western cultures? (e.g. BS like: “Either you’re with us or you’re against us”). Never a continuum nor a trinity. Or calm acceptance without assessment.

Now, please! Software Testing is not a terrible job and the circumstances are not bad at all. To illustrate, go and read Viktor Frankl’s Man’s Search for Meaning.

I’ll wait.

See, that is a horrible situation. For those of you who couldn’t get the book or cannot read, here is a short summary: The book describes Viktor Frankl’s devastating time as a prisoner in Auschwitz and how he managed not to fall into total despair and to keep up his spirits to survive his personal disaster.

Whenever you find yourself in a situation, just compare it to that. I guarantee that you’ll always be fine.

And here’s a belief shattering secret: The world around you was not built to please your own little egoistic demands.

And here’s another: If you are one of those whining people, you are not fun to spend time with. People will avoid you. Then you will be alone. And even more miserable.

Therefore:

- smile every day

- never develop an inferiority complex

- don’t become a zombie tester

- avoid process freaks

- fight substandard work ethics wherever you find them

- have a serious discussion with testers not willing to read books or learn anything (I think I am going to have a special post on that)

Let’s finish this post in a slightly more upbeat mood. I propose to go watch this hilariously funny short clip on YouTube.

Some Words on Observation Proficiency - Part I

I like Wikipedia’s definition of observation:

“Observation is either an activity of a living being, such as a human, consisting of receiving knowledge of the outside world through the senses, or the recording of data using scientific instruments.”

But, how to become a better observer?

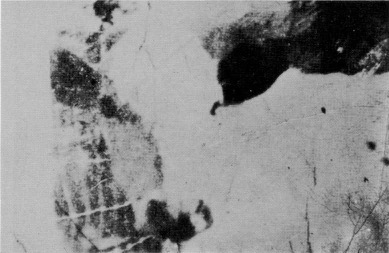

Deliberate practice leads to proficiency, and because I enjoy providing a good service - voilà - here’s an opportunity to hone your skill. What do you see in the picture below?

If you feel like it, you may let me know your solution here.

Games have always been the most efficient (and at the same time most joyful) approach to learning. It is by playing games that kids learn about life. Lumosity is an excellent website that I highly recommend if you want to train your observation skills. I like the games Bird Watching, Space Junk or Top Chimp.

How to Choose a Good Software Testing Conference

Here is my “to be avoided” list:

- Overcrowded with the sales people of tool vendors

- Conference is organized by a tool vendor

- Sessions were selected on the criterion: “what tool vendor is willing to pay for it”

Here is my “oh yeah, great” list:

- great speakers

- interesting formats, like unconference or coach camp

- participation and discussion instead of 45min bloody boring bullet ball battles (yes, I know, it is called bullet point, but it wouldn’t have made such a cool alliteration)

What is it that should happen at a conference?

If you are inspired for action = that is good.

If you learn something new = that is good, too.

If you are inspired, delighted, enlightened, and cannot stop smiling = then it is really great.

Make up your own mind and choose wisely. Maybe you’d like to follow @testevents. They promise to update you on testing events.

Lines of Thought on Software Testing

- Checking

- Verification

- Testing

Is there a difference or is it just irrelevant wordplay? I don’t know, let’s elaborate.

When I hear “checking” I immediately see a boring check list and then I fall asleep. The word “verification” evokes an image of a bureaucrat verifying that all fields on a stupid form are filled in. Now it is “testing” that gets my blood flowing. I mean, looking over the fence to another domain: they are called food testers and not food checkers and their life is filled with glamour and delicious wine. That’s where we want to be, isn’t it?

And: There were some famous people named “Tester” such as Desmond Tester, Jon Tester and William Tester. Well, they were not really that famous.

I see checking, verification and testing in ascending order of skillfulness. Checking is just following detailed instructions (e.g. detailed test cases executed by non-testers). Verification adds some element of inquiry to it (e.g. detailed test cases executed by testers). Testing on the other hand vibrates in full swing by skillfully questioning the product.

The company I work for calls what my group does “software verification” for the only reason that the term “testing” was already occupied by production. My next mission will be to change the name of my group to “software testing”. And, of course, become better at what we are doing which at the end of the day is far more important.

And here is a good book to start with: Lessons Learned in Software Testing

Some Words on Observation Proficiency - Part II

By looking at it again I can now actually identify at least 2 sleeping women: a big one at the top right of the picture and a small one that is lying on her belly and looking in the direction of the observer. Who knows, there might be even more! Just apply some more pattern matching mechanisms and pair it with a little bit of fantasy - and there you are!

We humans could also be defined as pattern matching machines. In my opinion that is how we survived to eventually become software testers. Let’s pause here and say a sincere “Thank you!” to our ancestors who constantly asked themselves: “Could this shade there be a nasty smilodon salivating in my direction?”

Anyway, read Francisca’s comment to the last post to also see the answer I was initially looking for. (And I say “Hello Francisca” here, because I happen to know her)

Now, in this post let’s walk through how observation and pattern matching of such an image actually works. To start with, here’s the picture:

There are different things happening in your brain now:

Stage 0: Surprise - Oh! Look at that! A picture with a mess of funny dots!

Stage 1: Reflection - Hm, what could that be?

Stage 2: Analysis - Ok, let’s see, there are some black dots and some shadier areas.

Stage 3: Hypothesis - That sure looks like a UFO up there, doesn’t it?

Stage 4: Disillusion - Oh, no! That’s not it. It is just a big mess of dots here.

Stage 5: Enlightenment - Ah, look at that! It’s a Dalmatian sniffing on the floor

And then you’re done!

And what does all this sound like? Let’s translate to another domain:

Stage 0: Surprise - Oh! Look at that! A nice piece of software!

Stage 1: Reflection - Hm, what could that be?

Stage 2: Analysis - Ok, let’s see, there are some buttons to press and some UI elements to look at.

Stage 3: Hypothesis - That sure looks like an entry field up there, doesn’t it?

Stage 4: Disillusion - Oh, no! That’s not it. It is just a big mess of dots here.

Stage 5: Enlightenment - Ah, look at that! It’s a bug sniffing on the floor

Yes, I know, it’s obvious. This is Exploratory Testing!